;

}

location / {

proxy_pass http://127.0.0.1:8001/;

proxy_set_header Host $host;

}

```

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1590183272,"How to redirect from ""/"" to a specific db/table",

https://github.com/simonw/datasette/issues/2030#issuecomment-1440814680,https://api.github.com/repos/simonw/datasette/issues/2030,1440814680,IC_kwDOBm6k_c5V4RZY,1350673,dmick,2023-02-22T21:22:42Z,2023-02-22T21:22:42Z,NONE,"@gk7279, you had asked in a separate bug about how to redirect web servers in general. The datasette docs actually have pretty good information on this for both nginx and apache2: https://docs.datasette.io/en/stable/deploying.html#running-datasette-behind-a-proxy

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1594383280,How to use Datasette with apache webserver on GCP?,

https://github.com/simonw/datasette/issues/2030#issuecomment-1440854834,https://api.github.com/repos/simonw/datasette/issues/2030,1440854834,IC_kwDOBm6k_c5V4bMy,19700859,gk7279,2023-02-22T21:54:39Z,2023-02-22T21:54:39Z,NONE,Thanks @dmick . I chose to create a firewall rule under my GCP to open the port of interest and datasette works. ,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1594383280,How to use Datasette with apache webserver on GCP?,

https://github.com/simonw/datasette/issues/2035#issuecomment-1460808028,https://api.github.com/repos/simonw/datasette/issues/2035,1460808028,IC_kwDOBm6k_c5XEilc,1176293,ar-jan,2023-03-08T20:14:47Z,2023-03-08T20:14:47Z,NONE,"+1, I have been wishing for this feature (also for use with template-sql). It was requested before here #1304.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1615692818,Potential feature: special support for `?a=1&a=2` on the query page,

https://github.com/simonw/datasette/issues/2054#issuecomment-1499797384,https://api.github.com/repos/simonw/datasette/issues/2054,1499797384,IC_kwDOBm6k_c5ZZReI,6213,dsisnero,2023-04-07T00:46:50Z,2023-04-07T00:46:50Z,NONE,"You should have a look at Roda, written in Ruby.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1657861026,"Make detailed notes on how table, query and row views work right now",

https://github.com/simonw/datasette/issues/2058#issuecomment-1506203550,https://api.github.com/repos/simonw/datasette/issues/2058,1506203550,IC_kwDOBm6k_c5Zxtee,547438,cephillips,2023-04-13T01:48:21Z,2023-04-13T01:48:21Z,NONE,Really interesting how you are using ChatGPT in this.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1663399821,"500 ""attempt to write a readonly database"" error caused by ""PRAGMA schema_version""",

https://github.com/simonw/datasette/issues/2058#issuecomment-1507264934,https://api.github.com/repos/simonw/datasette/issues/2058,1507264934,IC_kwDOBm6k_c5Z1wmm,1138559,esagara,2023-04-13T16:35:21Z,2023-04-13T16:35:21Z,NONE,"I tried deploying the fix you submitted, but I'm still getting the same errors. I can paste the logs here if needed, but I really don't see anything new in them.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1663399821,"500 ""attempt to write a readonly database"" error caused by ""PRAGMA schema_version""",

https://github.com/simonw/datasette/issues/2067#issuecomment-1532304714,https://api.github.com/repos/simonw/datasette/issues/2067,1532304714,IC_kwDOBm6k_c5bVR1K,39538958,justmars,2023-05-03T00:16:03Z,2023-05-03T00:16:03Z,NONE,"Curiously, after running commands on the database that was litestream-restored, datasette starts to work again, e.g.

```sh

litestream restore -o data/db.sqlite s3://mytestbucketxx/db

datasette data/db.sqlite

# fails (OperationalError described above)

```

```sh

litestream restore -o data/db.sqlite s3://mytestbucketxx/db

sqlite-utils enable-wal data/db.sqlite

datasette data/db.sqlite

# works

```

```sh

litestream restore -o data/db.sqlite s3://mytestbucketxx/db

sqlite-utils optimize data/db.sqlite

datasette data/db.sqlite

# works

```

```sh

litestream restore -o data/db.sqlite s3://mytestbucketxx/db

sqlite3 data/db.sqlite "".clone test.db""

datasette test.db

# works

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1690765434,Litestream-restored db: errors on 3.11 and 3.10.8; but works on py3.10.7 and 3.10.6,

https://github.com/simonw/datasette/issues/2069#issuecomment-1537277919,https://api.github.com/repos/simonw/datasette/issues/2069,1537277919,IC_kwDOBm6k_c5boP_f,31861128,yqlbu,2023-05-07T03:17:35Z,2023-05-07T03:17:35Z,NONE,"Some updates:

I notice that there is an option in the CLI where we can explicitly set `immutable` mode when spinning up the server

```console

Options:

-i, --immutable PATH Database files to open in immutable mode

```

Then, the question is - how can I disable immutable mode in the deployed instance on Vercel?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1698865182,[BUG] Cannot insert new data to deployed instance,

https://github.com/simonw/datasette/issues/2087#issuecomment-1636134091,https://api.github.com/repos/simonw/datasette/issues/2087,1636134091,IC_kwDOBm6k_c5hhWzL,653549,adarshp,2023-07-14T17:02:03Z,2023-07-14T17:02:03Z,NONE,"@asg017 - the docs say that the autodetection only occurs in configuration directory mode. I for one would also be interested in the `--settings settings.json` feature.

For context, I am developing a large database for use with Datasette, but the database lives in a different network volume than my source code, since the volume in which my source code lives is aggressively backed up, while the location where the database lives is meant for temporary files and is not as aggressively backed up (since the backups would get unreasonably large).","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1765870617,`--settings settings.json` option,

https://github.com/simonw/datasette/issues/2093#issuecomment-1616195496,https://api.github.com/repos/simonw/datasette/issues/2093,1616195496,IC_kwDOBm6k_c5gVS-o,273509,terinjokes,2023-07-02T00:06:54Z,2023-07-02T00:07:17Z,NONE,"I'm not keen on requiring metadata to be within the database. I commonly have multiple DBs, from various sources, and having one config file to provide the metadata works out very well. I use Datasette with databases where I'm not the original source, needing to mutate them to add a metadata table or sqlite-docs makes me uncomfortable.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1781530343,"Proposal: Combine settings, metadata, static, etc. into a single `datasette.yaml` File",

https://github.com/simonw/datasette/issues/2105#issuecomment-1642013043,https://api.github.com/repos/simonw/datasette/issues/2105,1642013043,IC_kwDOBm6k_c5h3yFz,2235371,aki-k,2023-07-19T12:41:36Z,2023-07-19T12:41:36Z,NONE,"The same problem can be seen in the links:

Advanced export

JSON shape: default, array, newline-delimited, object","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1811824307,When reverse proxying datasette with nginx an URL element gets erronously added,

https://github.com/simonw/datasette/issues/2105#issuecomment-1643873232,https://api.github.com/repos/simonw/datasette/issues/2105,1643873232,IC_kwDOBm6k_c5h-4PQ,2235371,aki-k,2023-07-20T12:53:00Z,2023-07-20T12:53:34Z,NONE,"I forgot to add that I followed these instructions to set up the python llm:

https://simonwillison.net/2023/Jul/18/accessing-llama-2/","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1811824307,When reverse proxying datasette with nginx an URL element gets erronously added,

https://github.com/simonw/datasette/issues/2105#issuecomment-1646247246,https://api.github.com/repos/simonw/datasette/issues/2105,1646247246,IC_kwDOBm6k_c5iH71O,2235371,aki-k,2023-07-21T21:16:37Z,2023-07-21T21:17:09Z,NONE,"I must be doing something wrong. On the page https://192.168.1.3:5432/datasette-llm/logs/_llm_migrations there's an option to filter the results, with an Apply button. It also tries to load a URL with an extra URL element in it:

https://192.168.1.3:5432/datasette-llm/datasette-llm/logs/_llm_migrations?_sort=name&name__contains=initial","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1811824307,When reverse proxying datasette with nginx an URL element gets erronously added,

https://github.com/simonw/datasette/issues/2123#issuecomment-1723362847,https://api.github.com/repos/simonw/datasette/issues/2123,1723362847,IC_kwDOBm6k_c5muG4f,6523121,hueyy,2023-09-18T13:02:46Z,2023-09-18T13:02:46Z,NONE,"Can confirm that this bug can be reproduced as follows:

```

docker run datasetteproject/datasette datasette serve --reload

```

which produces the following output:

> Starting monitor for PID 10.

> Error: Invalid value for '[FILES]...': Path 'serve' does not exist.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1825007061,datasette serve when invoked with --reload interprets the serve command as a file,

https://github.com/simonw/datasette/issues/2126#issuecomment-1666912107,https://api.github.com/repos/simonw/datasette/issues/2126,1666912107,IC_kwDOBm6k_c5jWw9r,36199671,ctsrc,2023-08-06T16:27:34Z,2023-08-06T16:27:34Z,NONE,"And in similar fashion, how can I assign the `edit-tiddlywiki` permission to my user `myuser` in `metadata.yml` / `metadata.json`?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1838266862,Permissions in metadata.yml / metadata.json,

https://github.com/simonw/datasette/issues/2126#issuecomment-1674242356,https://api.github.com/repos/simonw/datasette/issues/2126,1674242356,IC_kwDOBm6k_c5jyuk0,36199671,ctsrc,2023-08-11T05:52:29Z,2023-08-11T05:52:29Z,NONE,"I see :) yeah, I’m on the stable version installed from homebrew on macOS","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1838266862,Permissions in metadata.yml / metadata.json,

https://github.com/simonw/datasette/issues/2133#issuecomment-1671649530,https://api.github.com/repos/simonw/datasette/issues/2133,1671649530,IC_kwDOBm6k_c5jo1j6,54462,HaveF,2023-08-09T15:41:14Z,2023-08-09T15:41:14Z,NONE,"Yes, using this approach (`datasette install -r requirements.txt`) will result in more consistency.

I'm curious about the results of the `datasette plugins --all` command. Where will we use the output of this command? Will it include configuration information for these plugins in the future? If so, will we need to consider the configuration of these plugins in addition to installing them on different computers?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1841501975,[feature request]`datasette install plugins.json` options,

https://github.com/simonw/datasette/issues/2133#issuecomment-1672360472,https://api.github.com/repos/simonw/datasette/issues/2133,1672360472,IC_kwDOBm6k_c5jrjIY,54462,HaveF,2023-08-10T00:31:24Z,2023-08-10T00:31:24Z,NONE,"It looks very nice now.

Finally, no more manual installation of plugins one by one. Thank you, Simon! ❤️

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1841501975,[feature request]`datasette install plugins.json` options,

https://github.com/simonw/datasette/issues/2143#issuecomment-1685259985,https://api.github.com/repos/simonw/datasette/issues/2143,1685259985,IC_kwDOBm6k_c5kcwbR,11784304,dvizard,2023-08-20T11:27:21Z,2023-08-20T11:27:21Z,NONE,"To chime in from a poweruser perspective: I'm worried that this is an overengineering trap. Yes, the current solution is somewhat messy. But there are datasette-wide settings, there are database-scope settings, there are table-scope settings etc, but then there are database-scope metadata and table-scope metadata. Trying to cleanly separate ""settings"" from ""configuration"" is, I believe, an uphill fight. Even separating db/table-scope settings from pure descriptive metadata is not always easy. Like, do canned queries belong to database metadata or to settings? Do I need two separate files for this?

One pragmatic solution I used in a project is stacking yaml configuration files. Basically, have an arbitrary number of yaml or json settings files that you load in a specified order. Every file adds to the corresponding settings in the earlier-loaded file (if it already existed). I implemented this myself but found later that there is an existing Python ""cascading dict"" type of thing, I forget what it's called. There is a bit of a challenge deciding whether there is ""replacement"" or ""addition"" (I think I pragmatically ran `update` on the second level of the dict but better solutions are certainly possible).

This way, one allows separation of settings into different blocks, while not imposing a specific idea of what belongs where that might not apply equally to all cases.
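A minimal sketch of the stacking idea described above, assuming each file has already been parsed into a plain dict. The names `merge_configs` and `_merge_into` and the sample layers are hypothetical, and this variant merges recursively at every level rather than calling `update` on the second level only:

```python
from copy import deepcopy


def merge_configs(*layers):
    '''Merge config dicts in order; later layers win on conflicting leaf values.'''
    merged = {}
    for layer in layers:
        _merge_into(merged, layer)
    return merged


def _merge_into(target, source):
    # Recurse into nested dicts so sibling keys from earlier layers survive;
    # any non-dict value simply replaces what was there before.
    for key, value in source.items():
        if isinstance(value, dict) and isinstance(target.get(key), dict):
            _merge_into(target[key], value)
        else:
            target[key] = deepcopy(value)


# Hypothetical layers, standing in for two parsed YAML files.
base = {'settings': {'default_page_size': 10}, 'plugins': {'plugin-a': {'path': '/src'}}}
extra = {'plugins': {'plugin-b': {'client_secret': 'SUCH_SECRET'}}}
config = merge_configs(base, extra)
```

With this choice, dicts are always added to and scalars or lists are always replaced, which is one possible answer to the replacement-versus-addition question rather than the only one.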

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1855885427,De-tangling Metadata before Datasette 1.0,

https://github.com/simonw/datasette/issues/2143#issuecomment-1685260244,https://api.github.com/repos/simonw/datasette/issues/2143,1685260244,IC_kwDOBm6k_c5kcwfU,11784304,dvizard,2023-08-20T11:29:00Z,2023-08-20T11:29:00Z,NONE,https://docs.python.org/3/library/collections.html#collections.ChainMap,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1855885427,De-tangling Metadata before Datasette 1.0,

https://github.com/simonw/datasette/issues/2143#issuecomment-1685260624,https://api.github.com/repos/simonw/datasette/issues/2143,1685260624,IC_kwDOBm6k_c5kcwlQ,11784304,dvizard,2023-08-20T11:31:16Z,2023-08-20T11:31:16Z,NONE,https://pypi.org/project/deep-chainmap/,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1855885427,De-tangling Metadata before Datasette 1.0,

https://github.com/simonw/datasette/issues/2143#issuecomment-1685263948,https://api.github.com/repos/simonw/datasette/issues/2143,1685263948,IC_kwDOBm6k_c5kcxZM,11784304,dvizard,2023-08-20T11:50:10Z,2023-08-20T11:50:10Z,NONE,"This also makes it simple to separate out secrets.

`datasette --config settings.yaml --config secrets.yaml --config db-docs.yaml --config db-fixtures.yaml`

settings.yaml

```

settings:
  default_page_size: 10
  max_returned_rows: 3000
  sql_time_limit_ms: 8000
plugins:
  datasette-ripgrep:
    path: /usr/local/lib/python3.11/site-packages

```

secrets.yaml

```

plugins:
  datasette-auth-github:
    client_secret: SUCH_SECRET

```

db-docs.yaml

```

databases:
  docs:
    permissions:
      create-table:
        id: editor

```

db-fixtures.yaml

```

databases:
  fixtures:
    tables:
      no_primary_key:
        hidden: true
    queries:
      neighborhood_search:
        sql: |-
          select neighborhood, facet_cities.name, state
          from facetable join facet_cities on facetable.city_id = facet_cities.id
          where neighborhood like '%' || :text || '%' order by neighborhood;
        title: Search neighborhoods
        description_html: |-
          This demonstrates basic LIKE search

```","{""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1855885427,De-tangling Metadata before Datasette 1.0,

https://github.com/simonw/datasette/issues/2143#issuecomment-1690799608,https://api.github.com/repos/simonw/datasette/issues/2143,1690799608,IC_kwDOBm6k_c5kx434,77071,pkulchenko,2023-08-24T00:09:47Z,2023-08-24T00:10:41Z,NONE,"@simonw, FWIW, I do exactly the same thing for one of my projects (both to allow multiple configuration files to be passed on the command line and setting individual values) and it works quite well for me and my users. I even use the same parameter name for both (https://studio.zerobrane.com/doc-configuration#configuration-via-command-line), but I understand why you may want to use different ones for files and individual values. There is one small difference that I accept code snippets, but I don't think it matters much in this case.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1855885427,De-tangling Metadata before Datasette 1.0,

https://github.com/simonw/datasette/issues/2143#issuecomment-1691094870,https://api.github.com/repos/simonw/datasette/issues/2143,1691094870,IC_kwDOBm6k_c5kzA9W,1238873,rclement,2023-08-24T06:43:40Z,2023-08-24T06:43:40Z,NONE,"If I may, the ""path-like"" configuration is great but one thing that would be even greater: allowing the same configuration to be provided using environment variables.

For instance:

```

datasette -s plugins.datasette-complex-plugin.configs '{""foo"": [1,2,3], ""bar"": ""baz""}'

```

could also be provided using:

```

export DS_PLUGINS_DATASETTE-COMPLEX-PLUGIN_CONFIGS='{""foo"": [1,2,3], ""bar"": ""baz""}'

datasette

```

(I do not like mixing `-` and `_` in env vars, but I do not have a better, easily reversible example at the moment.)
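To make the mapping concrete, here is a rough sketch of how such variables could be folded back into a nested config. This is not an existing Datasette feature: the `DS_` prefix comes from the example above, while the function name `config_from_env` and the rule of splitting on `_` are assumptions:

```python
import json


def config_from_env(environ, prefix='DS_'):
    '''Build a nested config dict from PREFIX_A_B_C=value style variables.'''
    config = {}
    for name, raw in environ.items():
        if not name.startswith(prefix):
            continue
        # '_' separates path segments, so a segment may contain '-' but not '_'.
        path = name[len(prefix):].lower().split('_')
        try:
            value = json.loads(raw)
        except json.JSONDecodeError:
            value = raw  # not JSON: keep the plain string
        node = config
        for segment in path[:-1]:
            node = node.setdefault(segment, {})
        node[path[-1]] = value
    return config


# A plain dict stands in for os.environ here.
env = {
    'DS_PLUGINS_DATASETTE-COMPLEX-PLUGIN_CONFIGS': json.dumps({'foo': [1, 2, 3], 'bar': 'baz'}),
    'HOME': '/root',
}
config = config_from_env(env)
```

The sketch also shows the reversibility problem: any key that itself contains `_` (such as `default_page_size`) cannot be expressed with this separator.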

FYI, you could take some inspiration from another great open source data project, Metabase:

https://www.metabase.com/docs/latest/configuring-metabase/config-file

https://www.metabase.com/docs/latest/configuring-metabase/environment-variables","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1855885427,De-tangling Metadata before Datasette 1.0,

https://github.com/simonw/datasette/issues/2145#issuecomment-1685471752,https://api.github.com/repos/simonw/datasette/issues/2145,1685471752,IC_kwDOBm6k_c5kdkII,77071,pkulchenko,2023-08-21T01:07:23Z,2023-08-21T01:07:23Z,NONE,"@simonw, since you're referencing ""rowid"" column by name, I just want to note that there may be an existing rowid column with completely different semantics (https://www.sqlite.org/lang_createtable.html#rowid), which is likely to break this logic. I don't see a good way to detect a proper ""rowid"" name short of checking if there is a field with that name and using the alternative (`_rowid_` or `oid`), which is not ideal, but may work.

In terms of the original issue, maybe a way to deal with it is to use rowid by default and then use primary key for WITHOUT ROWID tables (as they are guaranteed to be not null), but I suspect it may require significant changes to the API (and doesn't fully address the issue of what value to pass to indicate NULL when editing records). Would it make sense to generate a random string to indicate NULL values when editing?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1857234285,If a row has a primary key of `null` various things break,

https://github.com/simonw/datasette/issues/2147#issuecomment-1687433388,https://api.github.com/repos/simonw/datasette/issues/2147,1687433388,IC_kwDOBm6k_c5klDCs,18899,jackowayed,2023-08-22T05:05:33Z,2023-08-22T05:05:33Z,NONE,"Thanks for all this! You're totally right that the ASGI option is doable, if a bit low level and coupled to the current URI design. I'm totally fine with that being the final answer.

process_view is interesting and in the general direction of what I had in mind.

A somewhat less powerful idea: Is there value in giving a hook for just the query that's about to be run? Maybe I'm thinking a little narrowly about this problem I decided I wanted to solve, but I could see other uses for a hook of the sketch below:

```

def prepare_query(database, table, query):
    """"""Modify query that is about to be run in some way. Return the (possibly rewritten) query to run, or None to disallow running the query""""""

```

(Maybe you actually want to return a tuple so there can be an error message when you disallow, or something.)

Maybe it's too narrowly useful and some of the other pieces of datasette obviate some of these ideas, but off the cuff I could imagine using it to:

* Require a LIMIT. Either fail the query or add the limit if it's not there.

* Do logging, like my usecase.

* Do other analysis on whether you want to allow the query to run; a linter? query complexity?
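As an illustration of the first use, a toy implementation of the hypothetical `prepare_query` hook might look like this. No such hook exists in Datasette, the `max_rows` parameter is an assumption, and the regex check is deliberately naive (a LIMIT inside a subquery or a string literal would fool it):

```python
import re

# Matches 'limit <number>' anywhere in the query text, case-insensitively.
LIMIT_RE = re.compile(r'\blimit\s+\d+', re.IGNORECASE)


def prepare_query(database, table, query, max_rows=1000):
    '''Hypothetical hook: append a LIMIT when the query does not have one.'''
    if LIMIT_RE.search(query):
        return query
    stripped = query.rstrip().rstrip(';')
    return f'{stripped} LIMIT {max_rows}'


limited = prepare_query('fixtures', 'facetable', 'select * from facetable;')
```

A real version would want a SQL parser rather than a regex.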

Definitely feel free to say no, or not now. This is all me just playing around with what datasette and its plugin architecture can do with toy ideas, so don't let me push you to commit to a hook you don't feel confident fits well in the design.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1858228057,Plugin hook for database queries that are run,

https://github.com/simonw/datasette/issues/2147#issuecomment-1690955706,https://api.github.com/repos/simonw/datasette/issues/2147,1690955706,IC_kwDOBm6k_c5kye-6,18899,jackowayed,2023-08-24T03:54:35Z,2023-08-24T03:54:35Z,NONE,"That's fair. The best idea I can think of is that if a plugin wanted to limit intensive queries, it could add LIMITs or something. A hook that gives you visibility of queries and maybe the option to reject felt a little more limited than the existing plugin hooks, so I was trying to think of what else one might want to do while looking at to-be-run queries.

But without a real motivating example, I see why you don't want to add that.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1858228057,Plugin hook for database queries that are run,

https://github.com/simonw/datasette/issues/215#issuecomment-540548765,https://api.github.com/repos/simonw/datasette/issues/215,540548765,MDEyOklzc3VlQ29tbWVudDU0MDU0ODc2NQ==,2181410,clausjuhl,2019-10-10T12:27:56Z,2019-10-10T12:27:56Z,NONE,"Hi Simon. Any news on the ability to add routes (with static content) to datasette? As a public institution I'm required to have at least privacy, cookie and availability policies in place, and it really would be nice to have these under the same url. Thank you for some great work!","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",314506669,Allow plugins to define additional URL routes and views,

https://github.com/simonw/datasette/issues/2189#issuecomment-1728192688,https://api.github.com/repos/simonw/datasette/issues/2189,1728192688,IC_kwDOBm6k_c5nAiCw,173848,yozlet,2023-09-20T17:53:31Z,2023-09-20T17:53:31Z,NONE,"`/me munches popcorn at a furious rate, utterly enthralled`","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1901416155,Server hang on parallel execution of queries to named in-memory databases,

https://github.com/simonw/datasette/issues/2196#issuecomment-1760560526,https://api.github.com/repos/simonw/datasette/issues/2196,1760560526,IC_kwDOBm6k_c5o8AWO,1892194,Olshansk,2023-10-13T00:07:07Z,2023-10-13T00:07:07Z,NONE,That worked!,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",1910269679,Discord invite link returns 401,

https://github.com/simonw/datasette/issues/2214#issuecomment-1844819002,https://api.github.com/repos/simonw/datasette/issues/2214,1844819002,IC_kwDOBm6k_c5t9bQ6,2874,precipice,2023-12-07T07:36:33Z,2023-12-07T07:36:33Z,NONE,"If I uncheck `expand labels` in the Advanced CSV export dialog, the error does not occur. Re-checking that box and re-running the export does cause the error to occur.

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",2029908157,CSV export fails for some `text` foreign key references,

https://github.com/simonw/datasette/issues/227#issuecomment-439194286,https://api.github.com/repos/simonw/datasette/issues/227,439194286,MDEyOklzc3VlQ29tbWVudDQzOTE5NDI4Ng==,222245,carlmjohnson,2018-11-15T21:20:37Z,2018-11-15T21:20:37Z,NONE,I'm diving back into https://salaries.news.baltimoresun.com and what I really want is the ability to inject the request into my context.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",315960272,prepare_context() plugin hook,

https://github.com/simonw/datasette/issues/236#issuecomment-1033772902,https://api.github.com/repos/simonw/datasette/issues/236,1033772902,IC_kwDOBm6k_c49nh9m,1376648,jordaneremieff,2022-02-09T13:40:52Z,2022-02-09T13:40:52Z,NONE,"Hi @simonw,

I've received some inquiries over the last year or so about Datasette and how it might be supported by [Mangum](https://github.com/jordaneremieff/mangum). I maintain Mangum which is, as far as I know, the only project that provides support for ASGI applications in AWS Lambda.

If there is anything that I can help with here, please let me know because I think what Datasette provides to the community (even beyond OSS) is noble and worthy of special consideration.","{""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",317001500,datasette publish lambda plugin,

https://github.com/simonw/datasette/issues/236#issuecomment-1465208436,https://api.github.com/repos/simonw/datasette/issues/236,1465208436,IC_kwDOBm6k_c5XVU50,545193,sopel,2023-03-12T14:04:15Z,2023-03-12T14:04:15Z,NONE,"I keep coming back to this in search for the related exploration, so I'll just link it now:

@simonw has meanwhile researched _how to deploy Datasette to AWS Lambda using function URLs and Mangum_ via https://github.com/simonw/public-notes/issues/6 and concluded _that's everything I need to know in order to build a datasette-publish-lambda plugin_.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",317001500,datasette publish lambda plugin,

https://github.com/simonw/datasette/issues/236#issuecomment-920543967,https://api.github.com/repos/simonw/datasette/issues/236,920543967,IC_kwDOBm6k_c423mLf,164214,sethvincent,2021-09-16T03:19:08Z,2021-09-16T03:19:08Z,NONE,":wave: I just put together a small example using the lambda container image support: https://github.com/sethvincent/datasette-aws-lambda-example

It uses mangum and AWS's [python runtime interface client](https://github.com/aws/aws-lambda-python-runtime-interface-client) to handle the lambda event stuff.

I'd be happy to help with a publish plugin for AWS lambda as I plan to use this for upcoming projects.

The example uses the [serverless](https://www.serverless.com) cli for deployment but there might be a more suitable deployment approach for the plugin. It would be cool if users didn't have to install anything additional other than the aws cli and its associated config/credentials setup.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",317001500,datasette publish lambda plugin,

https://github.com/simonw/datasette/issues/247#issuecomment-390689406,https://api.github.com/repos/simonw/datasette/issues/247,390689406,MDEyOklzc3VlQ29tbWVudDM5MDY4OTQwNg==,11912854,jsancho-gpl,2018-05-21T15:29:31Z,2018-05-21T15:29:31Z,NONE,"I've changed my mind about how to support external connectors besides SQLite, and I'm now working in a simpler style that respects the original Datasette, i.e. less refactoring. I present [a version of Datasette which supports other database connectors](https://github.com/jsancho-gpl/datasette/tree/external-connectors) and [a Datasette connector for HDF5/PyTables files](https://github.com/jsancho-gpl/datasette-pytables).","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",319449852,SQLite code decoupled from Datasette,

https://github.com/simonw/datasette/issues/254#issuecomment-388367027,https://api.github.com/repos/simonw/datasette/issues/254,388367027,MDEyOklzc3VlQ29tbWVudDM4ODM2NzAyNw==,247131,philroche,2018-05-11T13:41:46Z,2018-05-11T13:41:46Z,NONE,"An example deployment @ https://datasette-zkcvlwdrhl.now.sh/simplestreams-270f20c/cloudimage?content_id__exact=com.ubuntu.cloud%3Areleased%3Adownload

It is not causing errors, more of an inconvenience. I have worked around it using a `like` query instead. ","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",322283067,Escaping named parameters in canned queries,

https://github.com/simonw/datasette/issues/254#issuecomment-626340387,https://api.github.com/repos/simonw/datasette/issues/254,626340387,MDEyOklzc3VlQ29tbWVudDYyNjM0MDM4Nw==,247131,philroche,2020-05-10T14:54:13Z,2020-05-10T14:54:13Z,NONE,"This has now been resolved and is not present in current version of datasette.

Sample query @simonw mentioned now returns as expected.

https://aggreg8streams.tinyviking.ie/simplestreams?sql=select+*+from+cloudimage+where+%22content_id%22+%3D+%22com.ubuntu.cloud%3Areleased%3Adownload%22+order+by+id+limit+10","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",322283067,Escaping named parameters in canned queries,

https://github.com/simonw/datasette/issues/260#issuecomment-1051473892,https://api.github.com/repos/simonw/datasette/issues/260,1051473892,IC_kwDOBm6k_c4-rDfk,596279,zaneselvans,2022-02-26T02:24:15Z,2022-02-26T02:24:15Z,NONE,"Is there already functionality that can be used to validate the `metadata.json` file? Is there a JSON Schema that defines it? Or a validation that's available via datasette with Python? We're working on [automatically building the metadata](https://github.com/catalyst-cooperative/pudl/pull/1479) in CI and when we deploy to Cloud Run, and it would be nice to be able to check whether the metadata we're outputting is valid in our tests.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323223872,Validate metadata.json on startup,

https://github.com/simonw/datasette/issues/260#issuecomment-1235079469,https://api.github.com/repos/simonw/datasette/issues/260,1235079469,IC_kwDOBm6k_c5JndEt,596279,zaneselvans,2022-09-02T05:24:59Z,2022-09-02T05:24:59Z,NONE,@zschira is working with Pydantic while converting between and validating JSON frictionless datapackage descriptors that annotate an SQLite DB ([extracted from FERC's XBRL data](https://github.com/catalyst-cooperative/ferc-xbrl-extractor)) and the Datasette YAML metadata [so we can publish them with Datasette](https://github.com/catalyst-cooperative/pudl/pull/1831). Maybe there's some overlap? We've been loving Pydantic.,"{""total_count"": 1, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 1}",323223872,Validate metadata.json on startup,

https://github.com/simonw/datasette/issues/265#issuecomment-392890045,https://api.github.com/repos/simonw/datasette/issues/265,392890045,MDEyOklzc3VlQ29tbWVudDM5Mjg5MDA0NQ==,231923,yschimke,2018-05-29T18:37:49Z,2018-05-29T18:37:49Z,NONE,"Just about to ask for this! Move this page https://github.com/simonw/datasette/wiki/Datasettes

into a datasette, with some concept of versioning as well.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323677499,Add links to example Datasette instances to appropiate places in docs,

https://github.com/simonw/datasette/issues/267#issuecomment-414860009,https://api.github.com/repos/simonw/datasette/issues/267,414860009,MDEyOklzc3VlQ29tbWVudDQxNDg2MDAwOQ==,78156,annapowellsmith,2018-08-21T23:57:51Z,2018-08-21T23:57:51Z,NONE,"Looks to me like hashing, redirects and caching were documented as part of https://github.com/simonw/datasette/commit/788a542d3c739da5207db7d1fb91789603cdd336#diff-3021b0e065dce289c34c3b49b3952a07 - so perhaps this can be closed? :tada:","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323716411,"Documentation for URL hashing, redirects and cache policy",

https://github.com/simonw/datasette/issues/268#issuecomment-789409126,https://api.github.com/repos/simonw/datasette/issues/268,789409126,MDEyOklzc3VlQ29tbWVudDc4OTQwOTEyNg==,649467,mhalle,2021-03-03T03:57:15Z,2021-03-03T03:58:40Z,NONE,"In FTS5, I think doing an FTS search is actually much easier than doing a join against the main table like datasette does now. In fact, FTS5 external content tables provide a transparent interface back to the original table or view.

Here's what I'm currently doing:

* build a view that joins whatever tables I want and rename the columns to non-joiny names (e.g, `chapter.name AS chapter_name` in the view where needed)

* Create an FTS5 table with `content=""viewname""`

* As described in the ""external content tables"" section (https://www.sqlite.org/fts5.html#external_content_tables), sql queries can be made directly to the FTS table, which behind the covers makes select calls to the content table when the content of the original columns is needed.

* In addition, you get ""rank"" and ""bm25()"" available to you when you select on the _fts table.

Unfortunately, datasette doesn't currently seem happy being coerced into doing a real query on an fts5 table. This works:

```select col1, col2, col3 from table_fts where col1=""value"" and table_fts match escape_fts(""search term"") order by rank```

But this doesn't work in the datasette SQL query interface:

```select col1, col2, col3 from table_fts where col1=""value"" and table_fts match escape_fts(:search) order by rank``` (the ""search"" input text field doesn't show up)

For what datasette is doing right now, I think you could just use contentless fts5 tables (`content=""""`), since all you care about is the rowid: you're doing a subselect to get the rowid anyway. In fts5, that's just a contentless table.

I guess if you want to follow this suggestion, you'd need a somewhat different code path for fts5.
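
The steps above can be sketched with Python's built-in `sqlite3` module. This is only an illustration under assumptions: the `book` and `chapter` tables, the `chapter_view` view and the `chapter_fts` name are made up for the example, the view exposes an explicit `id` column that is used as `content_rowid`, the index is filled with the FTS5 `rebuild` command, and Datasette's `escape_fts()` is left out because it only exists inside Datasette. It also assumes the local SQLite build includes FTS5; if not, the example prints the error and moves on.

```python
import sqlite3

conn = sqlite3.connect(':memory:')
conn.executescript('''
    create table book (id integer primary key, title text);
    create table chapter (
        id integer primary key, book_id integer, name text, body text
    );
    insert into book values (1, 'Plague Years');
    insert into chapter values (1, 1, 'Arrival', 'the black death reaches the port');
    insert into chapter values (2, 1, 'Aftermath', 'the town slowly recovers');

    -- Flat view with renamed columns and an explicit integer id.
    create view chapter_view as
        select chapter.id as id,
               book.title as book_title,
               chapter.name as chapter_name,
               chapter.body as body
        from chapter join book on book.id = chapter.book_id;
''')

rows = None  # stays None if this SQLite build has no FTS5 module
try:
    conn.executescript('''
        -- External content FTS5 table that reads its text from the view.
        create virtual table chapter_fts using fts5(
            book_title, chapter_name, body,
            content='chapter_view', content_rowid='id'
        );
        insert into chapter_fts(chapter_fts) values ('rebuild');
    ''')
    # Query the FTS table directly; rank is available for ordering.
    rows = conn.execute(
        'select chapter_name, book_title from chapter_fts '
        'where chapter_fts match :search order by rank',
        {'search': 'death'},
    ).fetchall()
except sqlite3.OperationalError as error:
    print('FTS5 example skipped:', error)

print(rows)
```

The named `:search` parameter here is bound from Python, so this does not reproduce the missing input field in the Datasette SQL interface; it only shows the external content table answering a query on its own.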

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323718842,Mechanism for ranking results from SQLite full-text search,

https://github.com/simonw/datasette/issues/268#issuecomment-790257263,https://api.github.com/repos/simonw/datasette/issues/268,790257263,MDEyOklzc3VlQ29tbWVudDc5MDI1NzI2Mw==,649467,mhalle,2021-03-04T03:20:23Z,2021-03-04T03:20:23Z,NONE,"It's kind of an ugly hack, but you can try out what using the fts5 table as an actual datasette-accessible table looks like without changing any datasette code by creating yet another view on top of the fts5 table:

`create view proxyview as select *, rank, table_fts as fts from table_fts;`

That's now visible from datasette, just like any other view, but you can use `fts match escape_fts(search_string) order by rank`.

This is only good as a proof of concept because you're inefficiently going from view -> fts5 external content table -> view -> data table. However, it does show it works.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323718842,Mechanism for ranking results from SQLite full-text search,

https://github.com/simonw/datasette/issues/268#issuecomment-876428348,https://api.github.com/repos/simonw/datasette/issues/268,876428348,MDEyOklzc3VlQ29tbWVudDg3NjQyODM0OA==,9308268,rayvoelker,2021-07-08T13:13:12Z,2021-07-08T13:13:12Z,NONE,"I had set up a full text search on my instance of Datasette for title data for our public library, and was noticing that some of the features of the SQLite FTS weren't working as expected ... and maybe the issue is in the `escape_fts()` function

vs removing the function...

Also, on the issue of sorting by rank by default .. perhaps something like this could work for the baked-in default SQL query for Datasette?

[link to the above search in my instance of Datasette](https://ilsweb.cincinnatilibrary.org/collection-analysis/current_collection-87a9011?sql=with+fts_search+as+%28%0D%0A++select%0D%0A++rowid%2C%0D%0A++rank%0D%0A++++from%0D%0A++++++bib_fts%0D%0A++++where%0D%0A++++++bib_fts+match+%3Asearch%0D%0A%29%0D%0A%0D%0Aselect%0D%0A++%0D%0A++bib_record_num%2C%0D%0A++creation_date%2C%0D%0A++record_last_updated%2C%0D%0A++isbn%2C%0D%0A++best_author%2C%0D%0A++best_title%2C%0D%0A++publisher%2C%0D%0A++publish_year%2C%0D%0A++bib_level_callnumber%2C%0D%0A++indexed_subjects%0D%0Afrom%0D%0A++fts_search%0D%0A++join+bib+on+bib.rowid+%3D+fts_search.rowid%0D%0A++%0D%0Aorder+by%0D%0Arank%0D%0A&search=black+death+NOT+fiction)","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323718842,Mechanism for ranking results from SQLite full-text search,

https://github.com/simonw/datasette/issues/268#issuecomment-876721585,https://api.github.com/repos/simonw/datasette/issues/268,876721585,MDEyOklzc3VlQ29tbWVudDg3NjcyMTU4NQ==,9308268,rayvoelker,2021-07-08T20:22:17Z,2021-07-08T20:22:17Z,NONE,"I do like the idea of there being an option for turning that on by default so that you could use those terms in the default ""Search"" bar presented when you browse to a table where FTS has been enabled. Maybe even a small inline pop up with a short bit explaining the FTS feature and the keywords (e.g. case matters). What are the side-effects of turning that on in the query string, or even by default as you suggested? I see that you stated in the docs... ""to ensure they do not cause any confusion for users who are not aware of them"", but I'm not sure what those could be.

Isn't it the case that those keywords are only picked up by sqlite where you're using the MATCH clause?

Seems like a really powerful feature (even though there are a lot of hurdles around setting it up in the sqlite db ... sqlite-utils makes that so simple by the way!)","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",323718842,Mechanism for ranking results from SQLite full-text search,

https://github.com/simonw/datasette/issues/272#issuecomment-400571521,https://api.github.com/repos/simonw/datasette/issues/272,400571521,MDEyOklzc3VlQ29tbWVudDQwMDU3MTUyMQ==,647359,tomchristie,2018-06-27T07:30:07Z,2018-06-27T07:30:07Z,NONE,"I’m up for helping with this.

Looks like you’d need static files support, which I’m planning on adding a component for. Anything else obviously missing?

For a quick overview it looks very doable - the test client ought to mean your test cases stay roughly the same.

Are you using any middleware or other components for the Sanic ecosystem? Do you use cookies or sessions at all?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",324188953,Port Datasette to ASGI,

https://github.com/simonw/datasette/issues/272#issuecomment-404514973,https://api.github.com/repos/simonw/datasette/issues/272,404514973,MDEyOklzc3VlQ29tbWVudDQwNDUxNDk3Mw==,647359,tomchristie,2018-07-12T13:38:24Z,2018-07-12T13:38:24Z,NONE,"Okay. I reckon the latest version should have all the kinds of components you'd need:

Recently added ASGI components for Routing and Static Files support, as well as making a few tweaks to make sure requests and responses are instantiated efficiently.

Don't have any redirect-to-slash / redirect-to-non-slash stuff out of the box yet, which it looks like you might miss.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",324188953,Port Datasette to ASGI,

https://github.com/simonw/datasette/issues/272#issuecomment-418695115,https://api.github.com/repos/simonw/datasette/issues/272,418695115,MDEyOklzc3VlQ29tbWVudDQxODY5NTExNQ==,647359,tomchristie,2018-09-05T11:21:25Z,2018-09-05T11:21:25Z,NONE,"Some notes:

* Starlette just got a bump to 0.3.0 - there's some renamings in there. It's got enough functionality now that you can treat it either as a framework or as a toolkit. Either way the component design is all just *here's an ASGI app* all the way through.

* Uvicorn got a bump to 0.3.3 - Removed some cyclical references that were causing garbage collection to impact performance. Ought to be a decent speed bump.

* Wrt. passing config - Either use a single envvar that points to a config, or use multiple envvars for the config. Uvicorn could get a flag to read a `.env` file, but I don't see ASGI itself having a specific interface there.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",324188953,Port Datasette to ASGI,

https://github.com/simonw/datasette/issues/272#issuecomment-494297022,https://api.github.com/repos/simonw/datasette/issues/272,494297022,MDEyOklzc3VlQ29tbWVudDQ5NDI5NzAyMg==,647359,tomchristie,2019-05-21T08:39:17Z,2019-05-21T08:39:17Z,NONE,"Useful context stuff:

> ASGI decodes %2F encoded slashes in URLs automatically

`raw_path` for ASGI looks to be under consideration: https://github.com/django/asgiref/issues/87

> uvicorn doesn't support Python 3.5

That was an issue specifically against the <=3.5.2 minor point releases of Python, now resolved: https://github.com/encode/uvicorn/issues/330 👍

> Starlette for things like form parsing - but it's 3.6+ only!

Yeah - the bits that require 3.6 are anywhere with the ""async for"" syntax. If it wasn't for that I'd downport it, but that one's a pain. It's the one bit of syntax to watch out for if you're looking to bring any bits of implementation across to Datasette.

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",324188953,Port Datasette to ASGI,

https://github.com/simonw/datasette/issues/276#issuecomment-744461856,https://api.github.com/repos/simonw/datasette/issues/276,744461856,MDEyOklzc3VlQ29tbWVudDc0NDQ2MTg1Ng==,296686,robintw,2020-12-14T14:04:57Z,2020-12-14T14:04:57Z,NONE,"I'm looking into using datasette with a database with spatialite geometry columns, and came across this issue. Has there been any progress on this since 2018?

In one of my tables I'm just storing lat/lon points in a spatialite point geometry, and I've managed to make datasette-cluster-map display the points by extracting the lat and lon in SQL - using something like `select ... ST_X(location) as longitude, ST_Y(location) as latitude from Blah`. Something more 'built-in' would be great though - particularly for the tables I have that store more complex geometries.","{""total_count"": 1, ""+1"": 1, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",324835838,Handle spatialite geometry columns better,

https://github.com/simonw/datasette/issues/283#issuecomment-780991910,https://api.github.com/repos/simonw/datasette/issues/283,780991910,MDEyOklzc3VlQ29tbWVudDc4MDk5MTkxMA==,9308268,rayvoelker,2021-02-18T02:13:56Z,2021-02-18T02:13:56Z,NONE,"I was going to ask you about this issue when we talk during your office-hours schedule this Friday, but was there any support ever added for doing this cross-database joining?

I have a use-case where it could be pretty neat to do analysis using this tool on time-specific databases from snapshots

https://ilsweb.cincinnatilibrary.org/collection-analysis/

and thanks again for such an amazing tool!","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",325958506,Support cross-database joins,

https://github.com/simonw/datasette/issues/283#issuecomment-789680230,https://api.github.com/repos/simonw/datasette/issues/283,789680230,MDEyOklzc3VlQ29tbWVudDc4OTY4MDIzMA==,605492,justinpinkney,2021-03-03T12:28:42Z,2021-03-03T12:28:42Z,NONE,"One note on using this pragma I got an error on starting datasette `no such table: pragma_database_list`.
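
A small check along these lines can show whether the SQLite library linked into Python accepts the table-valued form of the pragma. It is a sketch under an assumption: the 3.16.0 threshold is my understanding of when table-valued pragma functions were added and is not stated in this thread, and `attached_database_names` is a hypothetical helper, not part of Datasette.

```python
import sqlite3

# Assumption: table-valued pragma functions need SQLite 3.16.0 or newer.
MIN_VERSION = (3, 16, 0)


def attached_database_names(conn):
    # Newer SQLite: query the pragma as if it were a table.
    if sqlite3.sqlite_version_info >= MIN_VERSION:
        rows = conn.execute('select name from pragma_database_list').fetchall()
        return [row[0] for row in rows]
    # Older SQLite: fall back to the plain PRAGMA, which returns
    # (seq, name, file) rows.
    rows = conn.execute('PRAGMA database_list').fetchall()
    return [row[1] for row in rows]


conn = sqlite3.connect(':memory:')
names = attached_database_names(conn)
print(sqlite3.sqlite_version, names)
```

Printing `sqlite3.sqlite_version` alongside the result makes it easy to see which branch ran on a given machine.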

I diagnosed this to an older version of sqlite3 (3.14.2) and upgrading to a newer version (3.34.2) fixed the issue.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",325958506,Support cross-database joins,

https://github.com/simonw/datasette/issues/316#issuecomment-398030903,https://api.github.com/repos/simonw/datasette/issues/316,398030903,MDEyOklzc3VlQ29tbWVudDM5ODAzMDkwMw==,132230,gavinband,2018-06-18T12:00:43Z,2018-06-18T12:00:43Z,NONE,"I should add that I'm using datasette version 0.22, Python 2.7.10 on Mac OS X. Happy to send more info if helpful.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",333238932,datasette inspect takes a very long time on large dbs,

https://github.com/simonw/datasette/issues/316#issuecomment-398109204,https://api.github.com/repos/simonw/datasette/issues/316,398109204,MDEyOklzc3VlQ29tbWVudDM5ODEwOTIwNA==,132230,gavinband,2018-06-18T16:12:45Z,2018-06-18T16:12:45Z,NONE,"Hi Simon,

Thanks for the response. Ok I'll try running `datasette inspect` up front.

In principle the db won't change. However, the site's in development and it's likely I'll need to add views and some auxiliary (smaller) tables as I go along. I will need to be careful with this if it involves an inspect step in each iteration, though.

g.

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",333238932,datasette inspect takes a very long time on large dbs,

https://github.com/simonw/datasette/issues/321#issuecomment-399098080,https://api.github.com/repos/simonw/datasette/issues/321,399098080,MDEyOklzc3VlQ29tbWVudDM5OTA5ODA4MA==,12617395,bsilverm,2018-06-21T13:10:48Z,2018-06-21T13:10:48Z,NONE,"Perfect, thank you!!","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",334190959,Wildcard support in query parameters,

https://github.com/simonw/datasette/issues/321#issuecomment-399106871,https://api.github.com/repos/simonw/datasette/issues/321,399106871,MDEyOklzc3VlQ29tbWVudDM5OTEwNjg3MQ==,12617395,bsilverm,2018-06-21T13:39:37Z,2018-06-21T13:39:37Z,NONE,"One thing I've noticed with this approach is that the query is executed with no parameters which I do not believe was the case previously. In the case the table contains a lot of data, this adds some time executing the query before the user can enter their input and run it with the parameters they want.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",334190959,Wildcard support in query parameters,

https://github.com/simonw/datasette/issues/321#issuecomment-399129220,https://api.github.com/repos/simonw/datasette/issues/321,399129220,MDEyOklzc3VlQ29tbWVudDM5OTEyOTIyMA==,12617395,bsilverm,2018-06-21T14:45:02Z,2018-06-21T14:45:02Z,NONE,Those queries look identical. How can this be prevented if the queries are in a metadata.json file?,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",334190959,Wildcard support in query parameters,

https://github.com/simonw/datasette/issues/321#issuecomment-399173916,https://api.github.com/repos/simonw/datasette/issues/321,399173916,MDEyOklzc3VlQ29tbWVudDM5OTE3MzkxNg==,12617395,bsilverm,2018-06-21T17:00:10Z,2018-06-21T17:00:10Z,NONE,"Oh I see.. My issue is that the query executes with an empty string prior to the user submitting the parameters. I'll try adding your workaround to some of my queries. Thanks again,","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",334190959,Wildcard support in query parameters,

https://github.com/simonw/datasette/issues/327#issuecomment-1043609198,https://api.github.com/repos/simonw/datasette/issues/327,1043609198,IC_kwDOBm6k_c4-NDZu,208018,dholth,2022-02-17T23:21:36Z,2022-02-17T23:33:01Z,NONE,"On fly.io. This particular database goes from 1.4GB to 200M. Slower, part of that might be having no `--inspect-file`?

```

$ datasette publish fly ... --generate-dir /tmp/deploy-this

...

$ mksquashfs large.db large.squashfs

$ rm large.db # don't accidentally put it in the image

$ cat Dockerfile

FROM python:3.8

COPY . /app

WORKDIR /app

ENV DATASETTE_SECRET 'xyzzy'

RUN pip install -U datasette

# RUN datasette inspect large.db --inspect-file inspect-data.json

ENV PORT 8080

EXPOSE 8080

CMD mount -o loop -t squashfs large.squashfs /mnt; datasette serve --host 0.0.0.0 -i /mnt/large.db --cors --port $PORT

```

It would also be possible to copy the file onto the ~6GB available on the ephemeral container filesystem on startup. A little against the spirit of the thing? On this example the whole docker image is 2.42 GB and the squashfs version is 1.14 GB.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",335200136,Explore if SquashFS can be used to shrink size of packaged Docker containers,

https://github.com/simonw/datasette/issues/327#issuecomment-1043626870,https://api.github.com/repos/simonw/datasette/issues/327,1043626870,IC_kwDOBm6k_c4-NHt2,208018,dholth,2022-02-17T23:37:24Z,2022-02-17T23:37:24Z,NONE,On second thought any kind of quick-to-decompress-on-startup could be helpful if we're paying for the container registry and deployment bandwidth but not ephemeral storage.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",335200136,Explore if SquashFS can be used to shrink size of packaged Docker containers,

https://github.com/simonw/datasette/issues/327#issuecomment-584657949,https://api.github.com/repos/simonw/datasette/issues/327,584657949,MDEyOklzc3VlQ29tbWVudDU4NDY1Nzk0OQ==,1055831,dazzag24,2020-02-11T14:21:15Z,2020-02-11T14:21:15Z,NONE,See https://github.com/simonw/datasette/issues/657 and my changes that allow datasette to load parquet files ,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",335200136,Explore if SquashFS can be used to shrink size of packaged Docker containers,

https://github.com/simonw/datasette/issues/328#issuecomment-1706701195,https://api.github.com/repos/simonw/datasette/issues/328,1706701195,IC_kwDOBm6k_c5lujGL,7983005,eric-burel,2023-09-05T14:10:39Z,2023-09-05T14:10:39Z,NONE,"Hey @simonw I hit the same issue as mentioned by @chmaynard on a fresh install, ""/mnt/fixtures.db"" doesn't seem to exist in the docker image","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",336464733,"Installation instructions, including how to use the docker image",

https://github.com/simonw/datasette/issues/328#issuecomment-427261369,https://api.github.com/repos/simonw/datasette/issues/328,427261369,MDEyOklzc3VlQ29tbWVudDQyNzI2MTM2OQ==,13698964,chmaynard,2018-10-05T06:37:06Z,2018-10-05T06:37:06Z,NONE,"```

~ $ docker pull datasetteproject/datasette

~ $ docker run -p 8001:8001 -v `pwd`:/mnt datasetteproject/datasette datasette -p 8001 -h 0.0.0.0 /mnt/fixtures.db

Usage: datasette -p [OPTIONS] [FILES]...

Error: Invalid value for ""files"": Path ""/mnt/fixtures.db"" does not exist.

```","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",336464733,"Installation instructions, including how to use the docker image",

https://github.com/simonw/datasette/issues/339#issuecomment-404576136,https://api.github.com/repos/simonw/datasette/issues/339,404576136,MDEyOklzc3VlQ29tbWVudDQwNDU3NjEzNg==,12617395,bsilverm,2018-07-12T16:45:08Z,2018-07-12T16:45:08Z,NONE,Thanks for the quick reply. Looks like that is working well.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",340396247,Expose SANIC_RESPONSE_TIMEOUT config option in a sensible way,

https://github.com/simonw/datasette/issues/352#issuecomment-584203999,https://api.github.com/repos/simonw/datasette/issues/352,584203999,MDEyOklzc3VlQ29tbWVudDU4NDIwMzk5OQ==,870184,xrotwang,2020-02-10T16:18:58Z,2020-02-10T16:18:58Z,NONE,"I don't want to re-open this issue, but I'm wondering whether it would be possible to include the full row for which a specific cell is to be rendered in the hook signature. My use case is rows where custom rendering would need access to multiple values (specifically, rows containing the constituents of interlinear glossed text (IGT) in separate columns, see https://github.com/cldf/cldf/tree/master/components/examples).

I could probably cobble this together with custom SQL and the sql-to-html plugin. But having a full row within a `render_cell` implementation seems a lot simpler.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",345821500,render_cell(value) plugin hook,

https://github.com/simonw/datasette/issues/393#issuecomment-451415063,https://api.github.com/repos/simonw/datasette/issues/393,451415063,MDEyOklzc3VlQ29tbWVudDQ1MTQxNTA2Mw==,1727065,ltrgoddard,2019-01-04T11:04:08Z,2019-01-04T11:04:08Z,NONE,Awesome - will get myself up and running on 0.26,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",395236066,"CSV export in ""Advanced export"" pane doesn't respect query",

https://github.com/simonw/datasette/issues/394#issuecomment-567127981,https://api.github.com/repos/simonw/datasette/issues/394,567127981,MDEyOklzc3VlQ29tbWVudDU2NzEyNzk4MQ==,132978,terrycojones,2019-12-18T17:18:06Z,2019-12-18T17:18:06Z,NONE,"Agreed, this would be nice to have. I'm currently working around it in `nginx` with additional location blocks:

```
location /datasette/ {
    proxy_pass http://127.0.0.1:8001/;
    proxy_redirect off;
    include proxy_params;
}

location /dna-protein-genome/ {
    proxy_pass http://127.0.0.1:8001/dna-protein-genome/;
    proxy_redirect off;
    include proxy_params;
}

location /rna-protein-genome/ {
    proxy_pass http://127.0.0.1:8001/rna-protein-genome/;
    proxy_redirect off;
    include proxy_params;
}
```

The 2nd and 3rd above are my databases. This works, but I have a small problem with URLs like `/rna-protein-genome?params....` that I could fix with some more nginx munging. I seem to do this sort of thing once every 5 years and then have to look it all up again.

Thanks!","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",396212021,base_url configuration setting,

https://github.com/simonw/datasette/issues/394#issuecomment-567128636,https://api.github.com/repos/simonw/datasette/issues/394,567128636,MDEyOklzc3VlQ29tbWVudDU2NzEyODYzNg==,132978,terrycojones,2019-12-18T17:19:46Z,2019-12-18T17:19:46Z,NONE,"Hmmm, wait, maybe my mindless (copy/paste) use of `proxy_redirect` is causing me grief...","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",396212021,base_url configuration setting,

https://github.com/simonw/datasette/issues/394#issuecomment-567219479,https://api.github.com/repos/simonw/datasette/issues/394,567219479,MDEyOklzc3VlQ29tbWVudDU2NzIxOTQ3OQ==,132978,terrycojones,2019-12-18T21:24:23Z,2019-12-18T21:24:23Z,NONE,"@simonw What about allowing a base url. The `

View Datasette (Click on the stop button to close the Datasette server)

'))

# Launch Datasette
with Popen(
    [
        'python', '-m', 'datasette', '--',
        database,
        '--port', str(port),
        '--config', f'base_url:{proxy_url}'
    ],
    stdout=PIPE,
    stderr=PIPE,
    bufsize=1,
    universal_newlines=True
) as p:
    print(p.stdout.readline(), end='')
    while True:
        try:
            line = p.stderr.readline()
            if not line:
                break
            print(line, end='')
            exit_code = p.poll()
        except KeyboardInterrupt:
            p.send_signal(SIGINT)

```

Ideally, I'd like some extra magic to notify users when they are leaving or closing the notebook tab and make them terminate the running datasette processes. I'll be looking for it.","{""total_count"": 1, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 1, ""rocket"": 0, ""eyes"": 0}",396212021,base_url configuration setting,

https://github.com/simonw/datasette/issues/401#issuecomment-455520561,https://api.github.com/repos/simonw/datasette/issues/401,455520561,MDEyOklzc3VlQ29tbWVudDQ1NTUyMDU2MQ==,1055831,dazzag24,2019-01-18T11:48:13Z,2019-01-18T11:48:13Z,NONE,"Thanks. I'll take a look at your changes.

I must admit I was struggling to see how to pass info from the python code in __init__.py into the javascript document.addEventListener function.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",400229984,How to pass configuration to plugins?,

https://github.com/simonw/datasette/issues/403#issuecomment-455752238,https://api.github.com/repos/simonw/datasette/issues/403,455752238,MDEyOklzc3VlQ29tbWVudDQ1NTc1MjIzOA==,1794527,ccorcos,2019-01-19T05:47:55Z,2019-01-19T05:47:55Z,NONE,Ah. That makes much more sense. Interesting approach.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",400511206,How does persistence work?,

https://github.com/simonw/datasette/issues/409#issuecomment-472844001,https://api.github.com/repos/simonw/datasette/issues/409,472844001,MDEyOklzc3VlQ29tbWVudDQ3Mjg0NDAwMQ==,43100,Uninen,2019-03-14T13:04:20Z,2019-03-14T13:04:42Z,NONE,It seems this affects the Datasette Publish -site as well: https://github.com/simonw/datasette-publish-support/issues/3,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",408376825,Zeit API v1 does not work for new users - need to migrate to v2,

https://github.com/simonw/datasette/issues/409#issuecomment-472875713,https://api.github.com/repos/simonw/datasette/issues/409,472875713,MDEyOklzc3VlQ29tbWVudDQ3Mjg3NTcxMw==,209967,michaelmcandrew,2019-03-14T14:14:39Z,2019-03-14T14:14:39Z,NONE,also linking this zeit issue in case it is helpful: https://github.com/zeit/now-examples/issues/163#issuecomment-440125769,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",408376825,Zeit API v1 does not work for new users - need to migrate to v2,

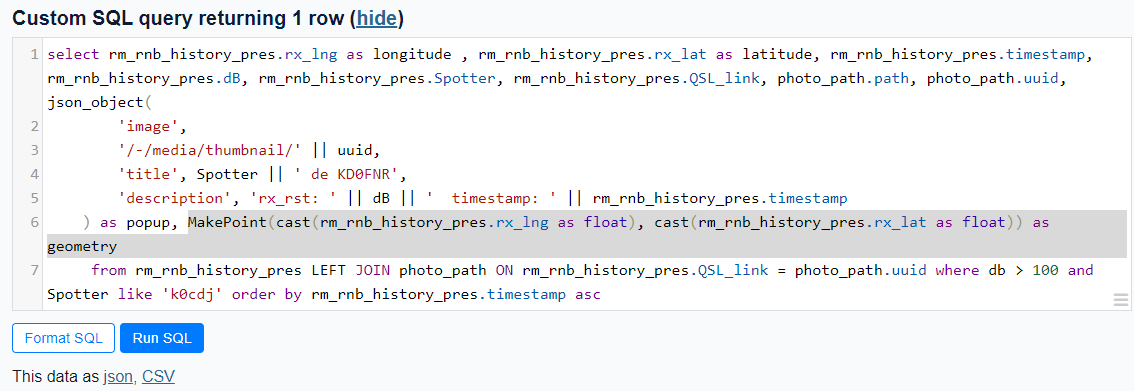

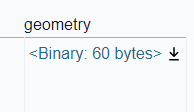

https://github.com/simonw/datasette/issues/411#issuecomment-1779267468,https://api.github.com/repos/simonw/datasette/issues/411,1779267468,IC_kwDOBm6k_c5qDXeM,363004,hcarter333,2023-10-25T13:23:04Z,2023-10-25T13:23:04Z,NONE,"Using the [Counties example](https://us-counties.datasette.io/counties/county_for_latitude_longitude?longitude=-122&latitude=37), I was able to pull out the MakePoint method as

MakePoint(cast(rm_rnb_history_pres.rx_lng as float), cast(rm_rnb_history_pres.rx_lat as float)) as geometry

which worked, giving me a geometry column.

gave

I believe it's the cast to float that does the trick. Prior to using the cast, I also received a 'wrong number of arguments' error.
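
The difference can be sketched without SpatiaLite by registering a stand-in two-argument function in plain `sqlite3`. Everything here is an assumption for illustration: `make_point` is a hypothetical substitute for the real `MakePoint`, and the failing form uses the string concatenation pattern from elsewhere in this issue, not the query I originally ran.

```python
import sqlite3


def make_point(x, y):
    # Stand-in for SpatiaLite's MakePoint: takes two separate numbers.
    return 'POINT({} {})'.format(float(x), float(y))


conn = sqlite3.connect(':memory:')
conn.create_function('make_point', 2, make_point)

# Named parameters arrive as text, as they would from a query string.
params = {'lng': '-122', 'lat': '37'}

# Concatenating the parameters produces ONE text argument, so SQLite
# rejects the call to a two-argument function.
try:
    conn.execute('''select make_point(:lng || ', ' || :lat)''', params)
    error_message = None
except sqlite3.OperationalError as error:
    error_message = str(error)

# Passing each parameter separately, cast to float, gives two numbers.
point = conn.execute(
    'select make_point(cast(:lng as float), cast(:lat as float))', params
).fetchone()[0]

print(error_message)
print(point)
```

In this sketch the argument count is what triggers the error; the cast then turns the text parameters into numbers.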

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",410384988,How to pass named parameter into spatialite MakePoint() function,

https://github.com/simonw/datasette/issues/411#issuecomment-519065799,https://api.github.com/repos/simonw/datasette/issues/411,519065799,MDEyOklzc3VlQ29tbWVudDUxOTA2NTc5OQ==,1055831,dazzag24,2019-08-07T12:00:36Z,2019-08-07T12:00:36Z,NONE,"Hi,

Apologies for the long delay.

I tried your suggested escaping approach:

`SELECT a.pos AS rank, b.id, b.name, b.country, b.latitude AS latitude, b.longitude AS longitude, a.distance / 1000.0 AS dist_km FROM KNN AS a LEFT JOIN airports AS b ON (b.rowid = a.fid) WHERE f_table_name = 'airports' AND ref_geometry = MakePoint(:Long || "", "" || :Lat) AND max_items = 6;

`

and it returns this error:

`wrong number of arguments to function MakePoint()`

Anything else you suggest I try?

Thanks","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",410384988,How to pass named parameter into spatialite MakePoint() function,

https://github.com/simonw/datasette/issues/415#issuecomment-473217334,https://api.github.com/repos/simonw/datasette/issues/415,473217334,MDEyOklzc3VlQ29tbWVudDQ3MzIxNzMzNA==,36796532,ad-si,2019-03-15T09:30:57Z,2019-03-15T09:30:57Z,NONE,"Awesome, thanks! 😁 ","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",418329842,Add query parameter to hide SQL textarea,

https://github.com/simonw/datasette/issues/417#issuecomment-751127384,https://api.github.com/repos/simonw/datasette/issues/417,751127384,MDEyOklzc3VlQ29tbWVudDc1MTEyNzM4NA==,1279360,dyllan-to-you,2020-12-24T22:56:48Z,2020-12-24T22:56:48Z,NONE,"Instead of scanning the directory every 10s, have you considered listening for the native system events to notify you of updates?

I think python has a nice module to do this for you called [watchdog](https://pypi.org/project/watchdog/)","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",421546944,Datasette Library,

https://github.com/simonw/datasette/issues/417#issuecomment-751504136,https://api.github.com/repos/simonw/datasette/issues/417,751504136,MDEyOklzc3VlQ29tbWVudDc1MTUwNDEzNg==,212369,drewda,2020-12-27T19:02:06Z,2020-12-27T19:02:06Z,NONE,"Very much looking forward to seeing this functionality come together. This is probably out-of-scope for an initial release, but in the future it could be useful to also think of how to run this in a containerized context. For example, an immutable datasette container that points to an S3 bucket of SQLite DBs or CSVs. Or an immutable datasette container pointing to a NFS volume elsewhere on a Kubernetes cluster.","{""total_count"": 2, ""+1"": 2, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",421546944,Datasette Library,

https://github.com/simonw/datasette/issues/483#issuecomment-495034774,https://api.github.com/repos/simonw/datasette/issues/483,495034774,MDEyOklzc3VlQ29tbWVudDQ5NTAzNDc3NA==,45919695,jcmkk3,2019-05-23T01:38:32Z,2019-05-23T01:43:04Z,NONE,"I think that location information is one of the other common pieces of hierarchical data. At least one that is general enough that extra dimensions could be auto-generated.

Also, I think this is an awesome project. Thank you for creating this.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",447408527,Option to facet by date using month or year,

https://github.com/simonw/datasette/issues/496#issuecomment-497885590,https://api.github.com/repos/simonw/datasette/issues/496,497885590,MDEyOklzc3VlQ29tbWVudDQ5Nzg4NTU5MA==,1740337,costrouc,2019-05-31T23:05:05Z,2019-05-31T23:05:05Z,NONE,"After applying a ""fix"" that allowed a longer build timeout, the cloudrun container was too slow when it actually ran. So I would say that if your sqlite database is over 1 GB, heroku and cloudrun are not good options.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",450862577,Additional options to gcloud build command in cloudrun - timeout,

https://github.com/simonw/datasette/issues/498#issuecomment-499262397,https://api.github.com/repos/simonw/datasette/issues/498,499262397,MDEyOklzc3VlQ29tbWVudDQ5OTI2MjM5Nw==,7936571,chrismp,2019-06-05T21:28:32Z,2019-06-05T21:28:32Z,NONE,"Thinking about this more, I'd probably have to make a template page to go along with this, right? I'm guessing there's no way to add an all-databases-all-tables search to datasette's ""home page"" except by copying the ""home page"" template and editing it?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",451513541,Full text search of all tables at once?,

https://github.com/simonw/datasette/issues/498#issuecomment-501903071,https://api.github.com/repos/simonw/datasette/issues/498,501903071,MDEyOklzc3VlQ29tbWVudDUwMTkwMzA3MQ==,7936571,chrismp,2019-06-13T22:35:06Z,2019-06-13T22:35:06Z,NONE,"I'd like to start working on this. I've made a custom template for `index.html` that contains a `form` with a search `input`. But I'm not sure where to go from here. When a user enters a search term, I'd like that term to be passed to a function I'll write that searches all tables with full text search enabled.

Can I make additional custom Python scripts for this or must I edit datasette's files directly?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",451513541,Full text search of all tables at once?,

https://github.com/simonw/datasette/issues/498#issuecomment-504785662,https://api.github.com/repos/simonw/datasette/issues/498,504785662,MDEyOklzc3VlQ29tbWVudDUwNDc4NTY2Mg==,7936571,chrismp,2019-06-23T20:47:37Z,2019-06-23T20:47:37Z,NONE,"Very cool, thank you.

Using http://search-24ways.herokuapp.com as an example, let's say I want to search all FTS columns in all tables in all databases for the word ""web.""

[Here's a link](http://search-24ways.herokuapp.com/24ways-f8f455f?sql=select+count%28*%29from+articles+where+rowid+in+%28select+rowid+from+articles_fts+where+articles_fts+match+%3Asearch%29&search=web) to the query I'd need to run to search ""web"" on FTS columns in `articles` table of the `24ways` database.

And [here's a link](http://search-24ways.herokuapp.com/24ways-f8f455f.json?sql=select+count%28*%29from+articles+where+rowid+in+%28select+rowid+from+articles_fts+where+articles_fts+match+%3Asearch%29&search=web) to the JSON version of the above result. I'd like to get the JSON result of that query for each FTS table of each database in my datasette project.

Is it possible in Javascript to automate the construction of query URLs like the one I linked, but for every FTS table in my datasette project?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",451513541,Full text search of all tables at once?,

https://github.com/simonw/datasette/issues/498#issuecomment-505228873,https://api.github.com/repos/simonw/datasette/issues/498,505228873,MDEyOklzc3VlQ29tbWVudDUwNTIyODg3Mw==,7936571,chrismp,2019-06-25T00:21:17Z,2019-06-25T00:21:17Z,NONE,"Eh, I'm not concerned with a relevance score right now. I think I'd be fine with a search whose results show links to data tables with at least one result.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",451513541,Full text search of all tables at once?,

https://github.com/simonw/datasette/issues/498#issuecomment-506985050,https://api.github.com/repos/simonw/datasette/issues/498,506985050,MDEyOklzc3VlQ29tbWVudDUwNjk4NTA1MA==,7936571,chrismp,2019-06-29T20:28:21Z,2019-06-29T20:28:21Z,NONE,"In my case, I have an ever-growing number of databases and tables within them. Most tables have FTS enabled. I cannot predict the names of future tables and databases, nor can I predict the names of the columns for which I wish to enable FTS.

For my purposes, I was thinking of writing up something that sends these two GET requests to each of my databases' tables.

```

http://my-server.com/database-name/table-name.json?_search=mySearchString

http://my-server.com/database-name/table-name.json

```

In the resulting JSON strings, I'd check the value of the key `filtered_table_rows_count`. If the value is `0` in the first URL's result, or if values from both requests are the same, that means FTS is either disabled for the table or it has no rows matching the search query.
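
A rough sketch of that check in Python, using only the standard library. The host is the placeholder from the URLs above, the function names are hypothetical, and it assumes each response is a JSON object with a `filtered_table_rows_count` key as described; it is an illustration of the idea, not how datasette itself does anything.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen

BASE_URL = 'http://my-server.com'  # placeholder host from the URLs above


def table_urls(database, table, search):
    # Build the two URLs: one with ?_search= and one without.
    base = '{}/{}/{}.json'.format(BASE_URL, quote(database), quote(table))
    return base + '?_search=' + quote(search), base


def has_search_hits(search_count, total_count):
    # A count of 0, or a count equal to the unfiltered total, is treated as
    # FTS being disabled for the table or nothing matching the search.
    return search_count != 0 and search_count != total_count


def fetch_count(url):
    # Assumption: the JSON response carries filtered_table_rows_count.
    with urlopen(url) as response:
        return json.load(response)['filtered_table_rows_count']


def table_matches(database, table, search, fetch=fetch_count):
    # fetch is injectable so the comparison can be tried without a server.
    search_url, plain_url = table_urls(database, table, search)
    return has_search_hits(fetch(search_url), fetch(plain_url))
```

One known weakness of this heuristic: a table where every row matches the search looks the same as a table without FTS, so it would be skipped.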

Is this feasible within the datasette library, or would it require some type of plugin? Or maybe you know of a better way of accomplishing this goal. Maybe I overlooked something.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",451513541,Full text search of all tables at once?,

https://github.com/simonw/datasette/issues/498#issuecomment-508590397,https://api.github.com/repos/simonw/datasette/issues/498,508590397,MDEyOklzc3VlQ29tbWVudDUwODU5MDM5Nw==,7936571,chrismp,2019-07-04T23:34:41Z,2019-07-04T23:34:41Z,NONE,I'll take your suggestion and do this all in Javascript. Would I need to make a `static/` folder in my datasette project's root directory and make a custom `index.html` template that pulls from `static/js/search-all-fts.js`? Or would you suggest another way?,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",451513541,Full text search of all tables at once?,

https://github.com/simonw/datasette/issues/498#issuecomment-509042334,https://api.github.com/repos/simonw/datasette/issues/498,509042334,MDEyOklzc3VlQ29tbWVudDUwOTA0MjMzNA==,7936571,chrismp,2019-07-08T00:18:29Z,2019-07-08T00:18:29Z,NONE,@simonw I made this primitive search that I've put in my Datasette project's custom templates directory: https://gist.github.com/chrismp/e064b41f08208a6f9a93150a23cf7e03,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",451513541,Full text search of all tables at once?,

https://github.com/simonw/datasette/issues/499#issuecomment-499260727,https://api.github.com/repos/simonw/datasette/issues/499,499260727,MDEyOklzc3VlQ29tbWVudDQ5OTI2MDcyNw==,7936571,chrismp,2019-06-05T21:22:55Z,2019-06-05T21:22:55Z,NONE,"I was thinking of having some kind of GUI in which regular reporters can upload a CSV and choose how to name the tables, columns and whatnot. Maybe it's possible to make such a GUI using Jinja template language? I ask because I'm unsure how to pursue this but I'd like to try. ","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",451585764,Accessibility for non-techie newsies? ,

https://github.com/simonw/datasette/issues/502#issuecomment-503237884,https://api.github.com/repos/simonw/datasette/issues/502,503237884,MDEyOklzc3VlQ29tbWVudDUwMzIzNzg4NA==,7936571,chrismp,2019-06-18T17:39:18Z,2019-06-18T17:46:08Z,NONE,It appears that I cannot reopen this issue but the proposed solution did not solve it. The link is not there. I have full text search enabled for a bunch of tables in my database and even clicking the link to reveal hidden tables did not show the download DB link.,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",453131917,Exporting sqlite database(s)?,

https://github.com/simonw/datasette/issues/506#issuecomment-500238035,https://api.github.com/repos/simonw/datasette/issues/506,500238035,MDEyOklzc3VlQ29tbWVudDUwMDIzODAzNQ==,1059677,Gagravarr,2019-06-09T19:21:18Z,2019-06-09T19:21:18Z,NONE,"If you don't mind calling out to Java, then Apache Tika is able to tell you what a load of ""binary stuff"" is, plus render it to XHTML where possible.

There's a python wrapper around the Apache Tika server, but for a more typical datasette use case you'd probably just want to grab the Tika CLI jar and call it with `--detect` and/or `--xhtml` to process the unknown binary blob.","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",453846217,Option to display binary data,

https://github.com/simonw/datasette/issues/511#issuecomment-730893729,https://api.github.com/repos/simonw/datasette/issues/511,730893729,MDEyOklzc3VlQ29tbWVudDczMDg5MzcyOQ==,4060506,Carib0u,2020-11-20T06:35:13Z,2020-11-20T06:35:13Z,NONE,"Trying to run on Windows today, I get an error from the utils/asgi.py module.

It's trying `from os import EX_CANTCREAT` which is Unix-only. I commented this line out, and (so far) it's working. ","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",456578474,Get Datasette tests passing on Windows in GitHub Actions,

https://github.com/simonw/datasette/issues/512#issuecomment-503236800,https://api.github.com/repos/simonw/datasette/issues/512,503236800,MDEyOklzc3VlQ29tbWVudDUwMzIzNjgwMA==,7936571,chrismp,2019-06-18T17:36:37Z,2019-06-18T17:36:37Z,NONE,Oh I didn't know the `description` field could be used for a database's metadata. ,"{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",457147936,"""about"" parameter in metadata does not appear when alone",

https://github.com/simonw/datasette/issues/513#issuecomment-503249999,https://api.github.com/repos/simonw/datasette/issues/513,503249999,MDEyOklzc3VlQ29tbWVudDUwMzI0OTk5OQ==,7936571,chrismp,2019-06-18T18:11:36Z,2019-06-18T18:11:36Z,NONE,"Ah, so basically put the SQLite databases on Linode, for example, and run `datasette serve` on there? I'm comfortable with that. ","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",457201907,Is it possible to publish to Heroku despite slug size being too large?,

https://github.com/simonw/datasette/issues/514#issuecomment-504684709,https://api.github.com/repos/simonw/datasette/issues/514,504684709,MDEyOklzc3VlQ29tbWVudDUwNDY4NDcwOQ==,7936571,chrismp,2019-06-22T17:36:25Z,2019-06-22T17:36:25Z,NONE,"> WorkingDirectory=/path/to/data

@russss, Which directory does this represent?","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",459397625,Documentation with recommendations on running Datasette in production without using Docker,

https://github.com/simonw/datasette/issues/514#issuecomment-504685187,https://api.github.com/repos/simonw/datasette/issues/514,504685187,MDEyOklzc3VlQ29tbWVudDUwNDY4NTE4Nw==,7936571,chrismp,2019-06-22T17:43:24Z,2019-06-22T17:43:24Z,NONE,"> > > WorkingDirectory=/path/to/data

> >

> >

> > @russss, Which directory does this represent?

>

> It's the working directory (cwd) of the spawned process. In this case if you set it to the directory your data is in, you can use relative paths to the db (and metadata/templates/etc) in the `ExecStart` command.

In my case, on a remote server, I set up a virtual environment in `/home/chris/Env/datasette`, and when I activated that environment I ran `pip install datasette`.

My datasette project is in `/home/chris/datatsette-project`, so I guess I'd use that directory in the `WorkingDirectory` parameter?

And the `ExecStart` parameter would be `/home/chris/Env/datasette/lib/python3.7/site-packages/datasette serve -h 0.0.0.0 my.db` I'm guessing?

","{""total_count"": 0, ""+1"": 0, ""-1"": 0, ""laugh"": 0, ""hooray"": 0, ""confused"": 0, ""heart"": 0, ""rocket"": 0, ""eyes"": 0}",459397625,Documentation with recommendations on running Datasette in production without using Docker,

https://github.com/simonw/datasette/issues/514#issuecomment-504686266,https://api.github.com/repos/simonw/datasette/issues/514,504686266,MDEyOklzc3VlQ29tbWVudDUwNDY4NjI2Ng==,7936571,chrismp,2019-06-22T17:58:50Z,2019-06-23T21:21:57Z,NONE,"@russss

Actually, here's what I've got in `/etc/systemd/system/datasette.service`

```

[Unit]

Description=Datasette

After=network.target

[Service]

Type=simple

User=chris

WorkingDirectory=/home/chris/digital-library

ExecStart=/home/chris/Env/datasette/lib/python3.7/site-packages/datasette serve -h 0.0.0.0 databases/*.db --cors --metadata metadata.json

Restart=on-failure

[Install]

WantedBy=multi-user.target

```

I ran:

```

$ sudo systemctl daemon-reload

$ sudo systemctl enable datasette

$ sudo systemctl start datasette

```

Then I ran:

`$ journalctl -u datasette -f`

Got this message.

```